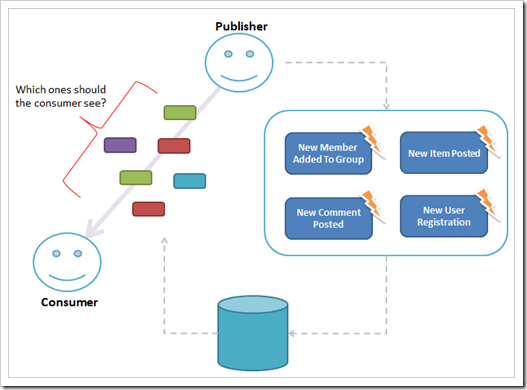

In this article I want to discuss some of the basics of Bundling and also to outline some thoughts that I have for organizing scripts and code when composing views in your application.

MVC4 Bundling Primer

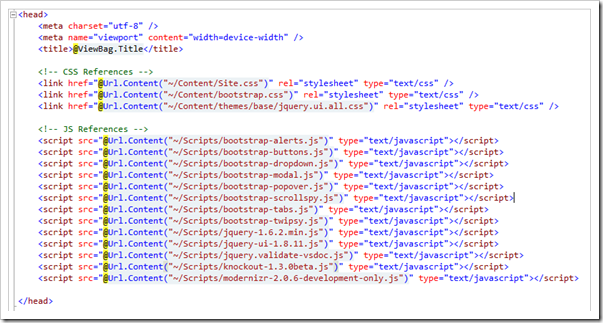

The Bundling feature allows you to easily combine and minify resources within your application. Take, for example, an application which contains the following JavaScript files:

In the ‘bad old days’ we may have emitted each of those script files separately, which means that the client would have to make lots of requests to pull down the content.

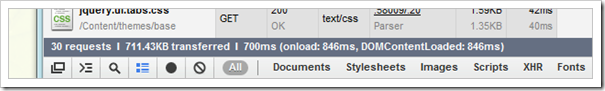

Emitting the files as above would result in the following network traffic on the client:

As you can see at the bottom of the image, 30 separate requests have been issued.
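To make the idea of "combine and minify" concrete, here is a rough sketch in plain JavaScript of what a bundler does conceptually. It is not how Microsoft.Web.Optimization is implemented; the `files` object and the `combine` and `naiveMinify` functions are hypothetical names invented for this illustration, and the "minifier" is deliberately simplistic.

```javascript
// Hypothetical in-memory "files"; a real bundler would read these from disk.
const files = {
  "a.js": "// helper\nfunction add(a, b) {\n    return a + b;\n}\n",
  "b.js": "var total = add(1, 2);   // uses add from a.js\n",
};

// Concatenate the sources in the order given, so one request can serve them all.
function combine(order, sources) {
  return order.map((name) => sources[name]).join("\n");
}

// Very naive "minifier": strips // line comments, indentation and blank lines.
// A real minifier parses the code; this one would break on strings containing "//".
function naiveMinify(source) {
  return source
    .split("\n")
    .map((line) => line.replace(/\/\/.*$/, "").trim())
    .filter((line) => line.length > 0)
    .join("\n");
}

// Two files in, one smaller payload out.
const bundle = naiveMinify(combine(["a.js", "b.js"], files));
```

The point is only that many small responses become a single, smaller one; the browser then needs one request instead of one per file.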

Default Bundle Behaviour

Using Bundling is as simple as registering the files that we want to optimize with the BundleTable. To achieve that, we can add the following line of code to Application_Start in Global.asax to register all of the files shown above:

BundleTable.Bundles.EnableDefaultBundles();

This default behaviour will register all of the .js and .css files located in their default locations and create routes so that they can be served up by the application. Rendering them out in the page is then as simple as pointing a script tag at the route that has been registered to serve them. In the default case, that would result in the following script tag being added to the bottom of your main layout file:

<script src="@Url.Content("~/Scripts/js")" type="text/javascript"></script>

Dynamic Bundles

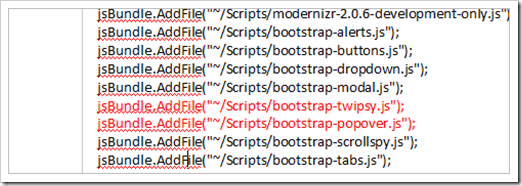

For finer-grained control over the ordering of resources within a Bundle you can create a dynamic bundle and manually add files in the order that you want them to be rendered:

Bundle bundle = new Bundle("~/CoreBundle", typeof(JsMinify));
bundle.AddFile("~/Scripts/jquery-1.7.1.min.js");
bundle.AddFile("~/Scripts/jquery-ui-1.8.16.js");
bundle.AddFile("~/Scripts/jquery.validate-vsdoc.js");
...
BundleTable.Bundles.Add(bundle);

And then, in the page, emit the path that was just registered:

<script src="@Url.Content("~/CoreBundle")" type="text/javascript"></script>

Note: I needed to register the Twitter Bootstrap scripts dynamically like this because I was getting errors when using the default registration. The errors were caused by the order in which the default registration behaviour added the scripts to the bundle. In the case of Twitter Bootstrap, I needed to order the scripts explicitly because of dependencies between Twipsy.js and Popover.js.
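The effect of ordering inside a combined file can be reproduced with a small, self-contained sketch. The two snippets below are stand-ins written for this example, not the real Bootstrap source, and the alphabetical order is used purely as an illustration of an order that does not respect dependencies; I am not claiming that this is the order the default registration uses.

```javascript
// Stand-ins for two scripts with a load-order dependency (not the real Bootstrap code).
const scripts = {
  "popover.js": "ns.Popover = Object.create(ns.Twipsy.prototype);",
  "twipsy.js":
    "ns.Twipsy = function () {}; ns.Twipsy.prototype.show = function () { return 'shown'; };",
};

// Concatenate the scripts in the given order and run them against a fresh namespace object.
function runBundle(order) {
  const ns = {};
  const source = order.map((name) => scripts[name]).join("\n");
  new Function("ns", source)(ns);
  return ns;
}

// Returns true when the combined script executes without throwing.
function loadsCleanly(order) {
  try {
    runBundle(order);
    return true;
  } catch (err) {
    return false;
  }
}

// Sorting by name puts popover.js first, before the Twipsy it depends on exists.
const alphabetical = Object.keys(scripts).sort();
// Listing the files by hand lets the dependency load first.
const explicit = ["twipsy.js", "popover.js"];
```

With these stand-ins, `loadsCleanly(alphabetical)` fails because the dependent script runs first, while `loadsCleanly(explicit)` succeeds. Adding files to the bundle by hand gives you the second situation.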

File Organization for child views

So far we have seen how to register all of the core scripts for our application but, as our application grows, we will typically introduce lots of individual scripts to manage the behaviour of specific pages. In my case, I adopt a convention where I separate all of the JavaScript and CSS code out of the View files and into a CSS/JS file with a name which mirrors the view that they represent. The following table illustrates how this mapping works:

| Resource Location | JS Location |
| --- | --- |
| Home/Index.cshtml | Scripts/Views/Home_Index.js |
| Shared/ChildView.cshtml | Scripts/Views/ChildView.js |
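The convention in the table can be written down as a small function. This is only a sketch: the function name `scriptPathForView` is hypothetical, and the rule that views in the Shared folder drop the folder prefix is my reading of the two rows above rather than something stated elsewhere.

```javascript
// Map a view path to its script path under the naming convention in the table.
// Assumption: views in "Shared" use just the view name; all others use Folder_View.
function scriptPathForView(viewPath) {
  const [folder, file] = viewPath.split("/");
  const viewName = file.replace(/\.cshtml$/, "");
  const scriptName = folder === "Shared" ? viewName : `${folder}_${viewName}`;
  return `Scripts/Views/${scriptName}.js`;
}

const homeScript = scriptPathForView("Home/Index.cshtml");
const sharedScript = scriptPathForView("Shared/ChildView.cshtml");
```

Having the rule in one place makes it easy to check that a view and its script have not drifted apart in name.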

To give a concrete example of how this works, let’s consider that the shared partial view listed in the above table contained the following code which allows a user to click on a link:

<h2>Child Content</h2>
<a href="#" id="clickerLink">Click Me</a>

The corresponding JS file would have behavioural code to handle the click event of the anchor tag like so:

$(function () {
    $("#clickerLink").click(function (e) {
        e.preventDefault();
        alert($(this).text());
    });
});

When it comes to rendering the output to the browser it will be desirable to have the JS emitted as part of the Core Bundle, thus allowing us to have all scripts combined, minified, and in one optimal location at the bottom of the page.

To achieve this desired outcome we simply register the child script from the partial view that depends on it like so:

@{
    var bundle = Microsoft.Web.Optimization.BundleTable.Bundles.GetRegisteredBundles()
        .Where(b => b.TransformType == typeof(Microsoft.Web.Optimization.JsMinify))
        .First();
    bundle.AddFile("~/Scripts/Views/ChildContent.js");
}

For the sake of completeness, you would probably abstract that messy BundleTable logic out into a helper, which would reduce your child registration code down to the following line of code:

Html.AddJavaScriptBundleFile("~/Scripts/Views/ChildContent.js", typeof(Microsoft.Web.Optimization.JsMinify));

And here's some example code for what the extension class might look like:

using System;
using System.Linq;
using System.Web.Mvc;
using Microsoft.Web.Optimization;

public static class HtmlHelperExtensions
{
    // Convenience overload: adds a script to the first bundle with the given transform type.
    public static void AddJavaScriptBundleFile(this HtmlHelper helper, string path, Type type)
    {
        AddBundleFile(helper, path, type, 100);
    }

    /// <param name="index">
    /// Added this overload to cater for switching between
    /// different Script optimizers more easily
    /// e.g. Switching between Microsoft.Web.Optimization
    /// bundles and ClientLibrary resources could
    /// be done seamlessly to the application
    /// </param>
    public static void AddBundleFile(this HtmlHelper helper, string path, Type type, int index)
    {
        // Note: index is not used by this implementation.
        // First() throws if no bundle with the given transform type has been registered.
        var bundle = BundleTable.Bundles.GetRegisteredBundles()
            .Where(b => b.TransformType == type)
            .First();
        bundle.AddFile(path);
    }
}
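The lookup that the helper performs (find the first bundle with a matching transform type, then append a file to it) can be modelled in a few lines of plain JavaScript. Everything here is a toy stand-in: the `bundles` array, the `~/Content/css` path and this `addBundleFile` function are hypothetical and do not call the real bundling API. The duplicate check is my own addition, on the assumption that a partial view may be rendered more than once per application lifetime.

```javascript
// Toy stand-in for the bundle table: each bundle has a path, a transform type and a file list.
const bundles = [
  { path: "~/Content/css", transformType: "CssMinify", files: [] },
  { path: "~/CoreBundle", transformType: "JsMinify", files: ["~/Scripts/jquery-1.7.1.min.js"] },
];

// Find the first bundle with the given transform type and append the file to it.
// Files already in the bundle are skipped so repeated registration has no effect.
function addBundleFile(table, filePath, transformType) {
  const bundle = table.find((b) => b.transformType === transformType);
  if (!bundle) {
    throw new Error(`No bundle registered for transform type ${transformType}`);
  }
  if (!bundle.files.includes(filePath)) {
    bundle.files.push(filePath);
  }
  return bundle;
}

addBundleFile(bundles, "~/Scripts/Views/ChildContent.js", "JsMinify");
addBundleFile(bundles, "~/Scripts/Views/ChildContent.js", "JsMinify"); // second call is a no-op
```

Two things are worth noticing in the model: a missing bundle is reported explicitly rather than failing somewhere deeper, and "first bundle of this type" only works predictably while there is a single JavaScript bundle.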